Definition of Network:

A computer network is a set of computers or other devices that are connected to each other in order to exchange data and share information. In computing, the term refers to any way of interconnecting two or more devices using cables, signals, waves or other methods, with the ultimate goal of transmitting data and sharing information, resources and services.

Purpose of networking:

The purpose of a network is, generally, to facilitate and speed up communication between two or more endpoints, whether they share the same physical space or are connected remotely. Such systems also save cost and time.

A well-known type of network is the intranet, a private network that uses Internet technologies as its basic architecture to connect various devices. The Internet itself, by contrast, connects devices and networks throughout the world, which is why it is called the “network of networks.”

Classifications of Networks:

Networks are classified by range (personal, local, campus, metropolitan or wide area), by method of connection (cable, fiber optics, radio, infrared and other wireless links) and by functional relationship (client-server or peer-to-peer). They are also classified by topology (bus, star, ring, mesh, tree, etc.) and by direction of transmission (simplex, half duplex or full duplex).

Use of a network:

In an office, for example, a network gives all employees the same access to resources such as programs and applications, or to devices like a printer or scanner. Moreover, a large-scale network facilitates communication among different geographic locations, so a company with branches around the world can keep in touch with its members simply and quickly. Finally, a network can be used at home to share files or to make the most of the available storage space.

Analog Network Signaling:

An analog signal is best compared to a wave. It has properties similar to those of an ocean wave and can be described using three specific characteristics: amplitude, frequency, and wavelength.

To use the ocean wave analogy, an analog signal's amplitude is like the height of a wave rolling onto the beach. The frequency of an analog signal can be compared to how often the waves roll in. Wavelength is the distance between the peak of one wave and the peak of the next.
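The three characteristics can be made concrete with a small sketch. It assumes the standard relation that wavelength equals propagation speed divided by frequency, which the text above does not state; the function names and the example values are hypothetical and chosen only for illustration.

```python
import math


def wavelength(speed_m_per_s, frequency_hz):
    """Distance between successive peaks, assuming wavelength = speed / frequency."""
    return speed_m_per_s / frequency_hz


def sample_sine(amplitude, frequency_hz, duration_s, sample_rate_hz):
    """Return evenly spaced samples of a sine wave with the given amplitude and frequency."""
    count = int(duration_s * sample_rate_hz)
    return [
        amplitude * math.sin(2 * math.pi * frequency_hz * i / sample_rate_hz)
        for i in range(count)
    ]


if __name__ == "__main__":
    # Illustrative values only: a 100 MHz signal travelling at roughly 3e8 m/s.
    print(wavelength(3.0e8, 100.0e6))
    # One cycle of a 1 Hz wave with amplitude 2, sampled 8 times per second.
    print(sample_sine(2.0, 1.0, 1.0, 8))
```

The amplitude sets the largest value any sample can reach, while the frequency sets how many full cycles fit into each second of samples.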

Advantages and Disadvantages of Analog Signals

Analog signals vary continuously and can convey more subtlety than a digital signal. For example, the human voice is analog and has more tonal nuance than a digital representation of the same voice. However, analog signals are very vulnerable to interference from outside forces and from other waves, which can distort them or cancel them out.

Digital Network Signaling:

A digital signal is made up of on/off states. Unlike the smooth curve of an analog wave, the digital signal switches abruptly between on and off. This fits the type of communication inside a computer, which is made up of on/off states as well.

Digital signals are much more reliable than analog signals because they are less vulnerable to interference and errors. However, digital equipment costs more and is much more complex.

Modulation (AM, FM, PM)

In

telecommunications, modulation is the process of conveying a message signal,

for example a digital bit stream or an analog audio signal, inside another

signal that can be physically transmitted. Modulation of a sine waveform is used to transform a baseband message signal into a passband signal, for example a low-frequency audio signal into a radio-frequency signal (RF signal). In radio communications, cable TV systems or the public switched telephone network, for instance, electrical signals can only be transferred over a limited passband frequency spectrum, with specific (non-zero) lower and upper cutoff frequencies. Modulating a sine-wave carrier makes it possible to keep the frequency content of the transferred signal as close as possible to the centre frequency (typically the carrier frequency) of the passband.

A device

that performs modulation is known as a modulator and a device that performs the

inverse operation of modulation is known as a demodulator (sometimes detector

or demod). A device that can do both operations is a modem

(modulator–demodulator).

Amplitude Modulation (AM)

Amplitude modulation (AM) is a method of impressing data onto an alternating-current (AC) carrier waveform. The highest frequency of the modulating data is normally less than 10 percent of the carrier frequency. The instantaneous amplitude of the overall signal (its envelope) varies with the instantaneous amplitude of the modulating data. Viewed in the frequency domain, however, the carrier component itself does not fluctuate in amplitude. Instead, the modulating data appears in the form of signal components at frequencies slightly higher and lower than that of the carrier. These components are called sidebands. The lower sideband (LSB) appears at frequencies below the carrier frequency; the upper sideband (USB) appears at frequencies above the carrier frequency. The LSB and USB are essentially "mirror images" of each other in a graph of signal amplitude versus frequency, as shown in the illustration. The sideband power accounts for the variations in the overall amplitude of the signal.

When a carrier is amplitude-modulated with a pure sine wave, up to 1/3 (33 percent) of the overall signal power is contained in the sidebands. The other 2/3 of the signal power is contained in the carrier, which does not contribute to the transfer of data. With a complex modulating signal such as voice, video, or music, the sidebands generally contain 20 to 25 percent of the overall signal power; thus the carrier consumes 75 to 80 percent of the power. This makes AM an inefficient mode. If an attempt is made to increase the modulating data input amplitude beyond these limits, the signal will become distorted and will occupy a much greater bandwidth than it should. This is called overmodulation, and it can result in interference to signals on nearby frequencies.
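The 1/3 figure can be checked with a short calculation. The sketch below assumes the textbook model of a carrier modulated by a single sine wave with modulation index m (a symbol not used in the text above), for which the sidebands carry m²/(2 + m²) of the total power. The function name is hypothetical.

```python
def sideband_power_fraction(m):
    """Fraction of total AM power in the two sidebands for sine-wave modulation.

    m is the modulation index (0 = no modulation, 1 = 100 percent modulation).
    Carrier power is proportional to 1/2 and sideband power to m*m/4,
    which simplifies to m*m / (2 + m*m).
    """
    if m < 0:
        raise ValueError("modulation index must be non-negative")
    return (m * m) / (2 + m * m)


if __name__ == "__main__":
    for index in (0.25, 0.5, 0.75, 1.0):
        share = sideband_power_fraction(index)
        print(f"m = {index:.2f}: sidebands {share:.1%}, carrier {1 - share:.1%}")
```

At m = 1 the function returns exactly one third, matching the limit quoted above; smaller indices leave an even larger share of the power in the carrier.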

Frequency Modulation (FM)

Frequency

modulation (FM) is a method of impressing data onto an alternating-current (AC)

wave by varying the instantaneous (immediate) frequency of the wave. This

scheme can be used with analog or digital data.

Analog FM

In analog

FM, the frequency of the AC signal wave, also called the carrier, varies in a

continuous manner. Thus, there are infinitely many possible carrier

frequencies. In narrowband FM, commonly used in two-way wireless

communications, the instantaneous carrier frequency varies by up to 5 kilohertz

(kHz, where 1 kHz = 1000 hertz or alternating cycles per second) above and

below the frequency of the carrier with no modulation. In wideband FM, used in

wireless broadcasting, the instantaneous frequency varies by up to several megahertz

(MHz, where 1 MHz = 1,000,000 Hz). When the instantaneous input wave has

positive polarity, the carrier frequency shifts in one direction; when the

instantaneous input wave has negative polarity, the carrier frequency shifts in

the opposite direction. At every instant in time, the extent of

carrier-frequency shift (the deviation) is directly proportional to the extent

to which the signal amplitude is positive or negative.

Digital FM

In digital

FM, the carrier frequency shifts abruptly, rather than varying continuously.

The number of possible carrier frequency states is usually a power of 2. If

there are only two possible frequency states, the mode is called

frequency-shift keying (FSK). In more complex modes, there can be four, eight,

or more different frequency states. Each specific carrier frequency represents

a specific digital input data state.
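A minimal sketch of two-state digital FM (FSK) follows. The two tone frequencies, the bit rate and the sample rate are illustrative assumptions, not values taken from the text, and the function names are hypothetical. Each bit selects one of two carrier frequencies, and the phase is carried over between bits so the waveform stays continuous while the frequency jumps abruptly.

```python
import math


def fsk_frequencies(bits, f0_hz=1200.0, f1_hz=2200.0):
    """Map each bit to the carrier frequency that represents it."""
    return [f1_hz if bit else f0_hz for bit in bits]


def fsk_modulate(bits, f0_hz=1200.0, f1_hz=2200.0, bit_rate=300, sample_rate=48000):
    """Return sine samples whose frequency switches between two states per bit."""
    samples_per_bit = sample_rate // bit_rate
    phase = 0.0
    samples = []
    for frequency in fsk_frequencies(bits, f0_hz, f1_hz):
        step = 2 * math.pi * frequency / sample_rate  # phase advance per sample
        for _ in range(samples_per_bit):
            samples.append(math.sin(phase))
            phase += step
    return samples


if __name__ == "__main__":
    wave = fsk_modulate([1, 0, 1])
    print(len(wave), "samples for 3 bits")
```

Modes with four or eight frequency states would follow the same pattern, with each group of two or three bits selecting one frequency.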

Phase Modulation (PM):

Phase

modulation (PM) is a method of impressing data onto an alternating-current (AC)

waveform by varying the instantaneous phase of the wave. This scheme can be

used with analog or digital data.

Analog PM

In analog PM, the phase of the AC signal wave, also called the carrier, varies in a continuous manner. Thus, there are infinitely many possible carrier phase states. When the instantaneous data input waveform has positive polarity, the carrier phase shifts in one direction; when the instantaneous data input waveform has negative polarity, the carrier phase shifts in the opposite direction. At every instant in time, the extent of carrier-phase shift (the phase angle) is directly proportional to the extent to which the signal amplitude is positive or negative.

Digital PM

In digital PM, the carrier phase shifts abruptly, rather than continuously back and forth. The number of possible carrier phase states is usually a power of 2. If there are only two possible phase states, the mode is called biphase modulation. In more complex modes, there can be four, eight, or more different phase states. Each phase angle (that is, each shift from one phase state to another) represents a specific digital input data state.
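As a rough illustration of why the number of phase states is usually a power of 2, the sketch below spaces the states evenly around the circle and counts how many bits each state can represent. Even spacing and the function names are assumptions made for the example; real systems may choose their phase angles differently.

```python
import math


def psk_phases(num_states):
    """Return evenly spaced carrier phases (radians) for a power-of-2 state count."""
    if num_states < 2 or num_states & (num_states - 1):
        raise ValueError("num_states must be a power of 2 and at least 2")
    return [2 * math.pi * k / num_states for k in range(num_states)]


def bits_per_symbol(num_states):
    """Number of data bits that one phase state can represent."""
    return num_states.bit_length() - 1


if __name__ == "__main__":
    for states in (2, 4, 8):
        degrees = [round(math.degrees(p)) for p in psk_phases(states)]
        print(states, "states,", bits_per_symbol(states), "bit(s) each:", degrees)
```

With two states (biphase modulation) the phases are 0 and 180 degrees and each state carries one bit; doubling the number of states adds one bit per state.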

Phase modulation is similar in practice to frequency modulation (FM). When the instantaneous phase of a carrier is varied, the instantaneous frequency changes as well. The converse also holds: when the instantaneous frequency is varied, the instantaneous phase changes. But PM and FM are not exactly equivalent, especially in analog applications. When an FM receiver is used to demodulate a PM signal, or when an FM signal is intercepted by a receiver designed for PM, the audio is distorted. This is because instantaneous frequency is the rate of change of instantaneous phase, so phase and frequency do not vary in direct proportion.

Direction of communication flow (Simplex, Half-Duplex, Full-Duplex)

In data communications, flow control is the process of managing the pacing of data transmission between two nodes to prevent a fast sender from outrunning a slow receiver. It provides a mechanism for the receiver to control the transmission speed, so that the receiving node is not overwhelmed with data from the transmitting node. Flow control should be distinguished from congestion control, which is used for controlling the flow of data when congestion has actually occurred. Flow control mechanisms can be classified by whether or not the receiving node sends feedback to the sending node.

Flow control is important because it is possible for a sending computer to transmit information at a faster rate than the destination computer can receive and process it. This can happen if the receiving computer has a heavy traffic load in comparison to the sending computer, or if the receiving computer has less processing power than the sending computer.
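The idea of receiver feedback can be sketched as a toy simulation. This is not any particular protocol: the class, the function, the buffer size and the processing rate are all hypothetical. The receiver advertises how much free buffer space it has, and the sender transmits only when that space is greater than zero.

```python
from collections import deque


class Receiver:
    """A slow receiver with a small buffer that reports its free space."""

    def __init__(self, buffer_size):
        self.buffer = deque()
        self.buffer_size = buffer_size

    def free_space(self):
        """Feedback to the sender: how many more items can be accepted."""
        return self.buffer_size - len(self.buffer)

    def accept(self, item):
        if self.free_space() == 0:
            raise OverflowError("receiver buffer overrun")
        self.buffer.append(item)

    def process_one(self):
        """Process the oldest buffered item, or return None if the buffer is empty."""
        return self.buffer.popleft() if self.buffer else None


def transfer(items, receiver, process_every=2):
    """Send items one per tick; the receiver processes one item every few ticks."""
    pending = deque(items)
    delivered = []
    stalled_ticks = 0
    tick = 0
    while pending or receiver.buffer:
        tick += 1
        if pending:
            if receiver.free_space() > 0:
                receiver.accept(pending.popleft())
            else:
                stalled_ticks += 1  # sender waits instead of overrunning the receiver
        if tick % process_every == 0:
            item = receiver.process_one()
            if item is not None:
                delivered.append(item)
    return delivered, stalled_ticks


if __name__ == "__main__":
    print(transfer(list(range(10)), Receiver(buffer_size=3)))
```

Because the sender checks the receiver's feedback before every transmission, the buffer never overflows even though the sender is twice as fast as the receiver; it simply stalls for some ticks.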

Data flow is

the flow of data between two points. The direction of the data flow can be

described as:

Simplex:

Data flows in only one direction on the data communication line (medium). Examples are radio and television broadcasts, which go from the TV station to your home television.

Half-Duplex:

Data flows in both directions, but in only one direction at a time, on the data communication line. For example, a conversation on walkie-talkies is a half-duplex data flow. Each person takes turns talking. If both talk at once, neither message gets through.

Full-Duplex:

Data flows in both directions simultaneously. Modems, for example, are configured to send and receive data at the same time.

Simplex vs. Duplex

SIMPLEX

Simplex communication is permanently unidirectional communication. Some of the very first serial connections between computers were simplex connections. For example, mainframes sent data to a printer and never checked to see if the printer was available or if the document printed properly, since that was a human job. Simplex links are built so that the transmitter (the one talking) sends a signal and it's up to the receiving device (the listener) to figure out what was sent and to correctly do what it was told. No traffic is possible in the other direction across the same connection.

You must use connectionless protocols with simplex circuits, as no acknowledgement or return traffic is possible over a simplex circuit. Broadcast satellite communication is also simplex communication. A radio signal is transmitted and it is up to the receiver to correctly determine what message has been sent and whether it arrived intact. Since televisions don't talk back to the satellites (yet), simplex communication works great in broadcast media such as radio, television and public address systems.

HALF DUPLEX

A half-duplex link can communicate in only one direction at a time. Two-way communication is possible, but not simultaneously. Walkie-talkies and CB radios mimic this behavior in that you cannot hear the other person while you are talking. Half-duplex connections are more common over electrical links. Since electricity won't flow unless you have a complete loop of wire, you need two pieces of wire between the two systems to form the loop. The first wire is used to transmit; the second wire is referred to as a common ground. The flow of electricity can be reversed over the transmitting wire, thereby reversing the path of communication. Because this simple arrangement cannot carry signals in both directions simultaneously, the link is half-duplex.

FULL DUPLEX

Full-duplex communication is two-way communication achieved over a physical link that has the ability to communicate in both directions simultaneously. With most electrical, fiber optic, two-way radio and satellite links, this is usually achieved with more than one physical connection, one for transmit and the other for receive. A telephone call is a familiar example: you and your friend can both talk and listen at the same time.

A half-duplex or full-duplex link is required for connection-oriented protocols such as TCP, because they depend on return traffic. A duplex circuit can be created by using two separate physical connections running in half-duplex or simplex mode. Two-way satellite communication, for example, is achieved using two simplex connections.
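The three modes can be summarized as a rule for when an endpoint may transmit. The sketch below is only a model of the definitions above; the enum, the function, and the convention that endpoint "A" is the fixed transmitter on a simplex link are assumptions made for illustration.

```python
from enum import Enum


class Mode(Enum):
    SIMPLEX = "simplex"
    HALF_DUPLEX = "half-duplex"
    FULL_DUPLEX = "full-duplex"


def may_transmit(mode, sender, other_side_transmitting):
    """Decide whether endpoint 'A' or 'B' may transmit right now.

    Assumption for this sketch: on a simplex link, 'A' is the only transmitter.
    """
    if mode is Mode.SIMPLEX:
        return sender == "A"                  # one fixed direction only
    if mode is Mode.HALF_DUPLEX:
        return not other_side_transmitting    # both directions, one at a time
    return True                               # full duplex: both at once


if __name__ == "__main__":
    for mode in Mode:
        print(mode.value, may_transmit(mode, "B", other_side_transmitting=True))
```

Only the full-duplex link lets endpoint B send while A is already sending, which is the case the walkie-talkie example rules out.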

Types of Network

Networks are most commonly categorized by the geographic area they cover and, with it, the number of systems or devices in the networked area. Computer networking is one of the most important branches of computing. Networking is the process by which two or more computers are linked together so that they can communicate. By creating a network, devices like printers and scanners, software, and files and data stored in the system can be shared. It makes communication among multiple computers easy. On a network, user access can also be restricted when necessary.

There are three types of networks:

Local Area Network (LAN):

A local area network (LAN) is a computer network that connects computers and devices in a limited geographical area such as a home, school, computer laboratory or office building. The defining characteristics of LANs, in contrast to wide area networks (WANs), include their usually higher data-transfer rates, smaller geographic area, and lack of a need for leased telecommunication lines. The computer systems are typically linked with cables or wireless links. In short, a LAN links computers on the same site.

Wide Area Network (WAN):

A wide area network (WAN) is a computer network that covers a broad area (i.e., any network whose communications links cross metropolitan, regional, or national boundaries). This is in contrast with personal area networks (PANs), local area networks (LANs), campus area networks (CANs), and metropolitan area networks (MANs), which are usually limited to a room, building, campus or specific metropolitan area (e.g., a city) respectively. In a WAN, resources are spread across a large area, as in a multinational business: through a WAN, offices in different countries can be interconnected. The best-known example of a WAN is the Internet, the largest network in the world. In short, a WAN links computer systems on different sites.

Metropolitan Area Network (MAN):

A metropolitan area network (MAN) is a computer network that usually spans a city or a large campus. A MAN usually interconnects a number of local area networks (LANs) using a high-capacity backbone technology, such as fiber-optic links, and provides up-link services to wide area networks (WANs) and the Internet.

The IEEE

802-2002 standard describes a MAN as being:

A MAN is optimized for a larger

geographical area than a LAN, ranging from several blocks of buildings to

entire cities. MANs can also depend on communications channels of

moderate-to-high data rates. A MAN might be owned and operated by a single

organization, but it usually will be used by many individuals and organizations. MANs might also be owned and operated as

public utilities. They will often provide means for internetworking of local

networks.

Networks can be further classified into two more divisions:

Peer to peer Network:

Peer to peer is an approach to computer networking where all computers share equivalent responsibility for processing data. Peer-to-peer networking (also known simply as peer networking) differs from client-server networking, where certain devices have responsibility for providing or "serving" data and other devices consume or otherwise act as "clients" of those servers.

Characteristics of a Peer Network:

Peer to peer

networking is common on small local area networks (LANs), particularly home

networks. Both wired and wireless home networks can be configured as peer to

peer environments.

Computers in

a peer to peer network run the same networking protocols and software. Peer

networks are also often situated physically near to each other, typically in

homes, small businesses or schools. Some peer networks, however, utilize the

Internet and are geographically dispersed worldwide.

Home

networks that utilize broadband routers are hybrid peer to peer and

client-server environments. The router provides centralized Internet connection

sharing, but file, printer and other resource sharing is managed directly

between the local computers involved.

Peer to Peer and P2P Networks:

Internet-based

peer to peer networks emerged in the 1990s due to the development of P2P file

sharing networks like Napster. Technically, many P2P networks (including the

original Napster) are not pure peer networks but rather hybrid designs as they

utilize central servers for some functions such as search.

Peer to Peer and Ad Hoc Wi-Fi Networks

Wi-Fi

wireless networks support so-called ad hoc connections between devices. Ad hoc

Wi-Fi networks are pure peer to peer compared to those utilizing wireless

routers as an intermediate device.

You can

configure computers in peer to peer workgroups to allow sharing of files,

printers and other resources across all of the devices. Peer networks allow

data to be shared easily in both directions, whether for downloads to your

computer or uploads from your computer.

On the

Internet, peer to peer networks handle a very high volume of file sharing

traffic by distributing the load across many computers. Because they do not

rely exclusively on central servers, P2P networks both scale better and are

more resilient than client-server networks in case of failures or traffic

bottlenecks.

Client Server Networks

The term

client-server refers to a popular model for computer networking that utilizes

client and server devices each designed for specific purposes. The

client-server model can be used on the Internet as well as local area networks

(LANs). Examples of client-server systems on the Internet include Web browsers

and Web servers, FTP clients and servers, and DNS.

Client and Server Devices:

Client/server

networking grew in popularity many years ago as personal computers (PCs) became

the common alternative to older mainframe computers. Client devices are

typically PCs with network software applications installed that request and

receive information over the network. Mobile devices as well as desktop

computers can both function as clients. A server device typically stores files

and databases including more complex applications like Web sites. Server

devices often feature higher-powered central processors, more memory, and larger

disk drives than clients.

Client-Server Applications:

The

client-server model distinguishes between applications as well as devices.

Network clients make requests to a server by sending messages, and servers

respond to their clients by acting on each request and returning results. One

server generally supports numerous clients, and multiple servers can be

networked together in a pool to handle the increased processing load as the

number of clients grows.
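The request-and-response pattern described above can be sketched with Python's standard socket module. To keep the example self-contained, the client and the server run in the same process and talk over a connected socket pair rather than a real network address. The upper-casing "service" and the function names are invented for illustration, and the sketch assumes each short message arrives in a single read.

```python
import socket
import threading


def serve(conn):
    """Server side: act on each request and return a result (upper-cased text)."""
    with conn:
        while True:
            request = conn.recv(1024)
            if not request:          # empty read means the client closed the connection
                break
            conn.sendall(request.upper())


def ask(conn, message):
    """Client side: send one request message and wait for the server's reply."""
    conn.sendall(message.encode("utf-8"))
    return conn.recv(1024).decode("utf-8")


def demo():
    """Run one server thread and issue two client requests over a socket pair."""
    client_end, server_end = socket.socketpair()
    worker = threading.Thread(target=serve, args=(server_end,))
    worker.start()
    with client_end:
        replies = [ask(client_end, "hello"), ask(client_end, "printer status?")]
    worker.join()
    return replies


if __name__ == "__main__":
    print(demo())
```

A real server would listen on a network address and handle many clients at once, typically with one thread or task per connection, but the division of roles is the same: the client asks, the server acts and answers.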

A client

computer and a server computer are usually two separate devices, each

customized for their designed purpose. For example, a Web client works best

with a large screen display, while a Web server does not need any display at

all and can be located anywhere in the world. However, in some cases a given

device can function both as a client and a server for the same application.

Likewise, a device that is a server for one application can simultaneously act

as a client to other servers, for different applications.

Some of the

most popular applications on the Internet follow the client-server model

including email, FTP and Web services. Each of these clients features a user

interface (either graphic- or text-based) and a client application that allows

the user to connect to servers. In the case of email and FTP, users enter a

computer name (or sometimes an IP address) into the interface to set up

connections to the server.

Local Client-Server Networks:

Many home networks utilize client-server systems without their users even realizing it. Broadband routers, for example, contain DHCP servers that provide IP addresses to the home computers (DHCP clients). Other types of network servers found in homes include print servers and backup servers.

Client-Server vs Peer-to-Peer and Other Models:

The

client-server model was originally developed to allow more users to share

access to database applications. Compared to the mainframe approach,

client-server offers improved scalability because connections can be made as

needed rather than being fixed. The client-server model also supports modular

applications that can make the job of creating software easier. In so-called

"two-tier" and "three-tier" types of client-server systems,

software applications are separated into modular pieces, and each piece is

installed on clients or servers specialized for that subsystem.

Client-server is just one approach to managing network applications. The primary alternative, peer-to-peer networking, models all devices as having equivalent capability rather than specialized client or server roles. Compared to client-server, peer to peer networks offer some advantages, such as more flexibility in growing the system to handle a large number of clients. Client-server networks generally offer advantages in keeping data secure.

Network topology

Network

topology is the layout pattern of interconnections of the various elements

(links, nodes, etc.) of a computer or biological network. Network topologies

may be physical or logical. Physical topology refers to the physical design of

a network including the devices, location and cable installation. Logical

topology refers to how data is actually transferred in a network as opposed to

its physical design. In general physical topology relates to a core network

whereas logical topology relates to basic network.

Topology can

be understood as the shape or structure of a network. This shape does not

necessarily correspond to the actual physical design of the devices on the

computer network. The computers on a home network can be arranged in a circle

but it does not necessarily mean that it represents a ring topology.

Any

particular network topology is determined only by the graphical mapping of the

configuration of physical and/or logical connections between nodes. The study

of network topology uses graph theory. Distances between nodes, physical

interconnections, transmission rates, and/or signal types may differ in two

networks and yet their topologies may be identical.

A local area

network (LAN) is one example of a network that exhibits both a physical

topology and a logical topology. Any given node in the LAN has one or more

links to one or more nodes in the network and the mapping of these links and

nodes in a graph results in a geometric shape that may be used to describe the

physical topology of the network. Likewise, the mapping of the data flow

between the nodes in the network determines the logical topology of the

network. The physical and logical topologies may or may not be identical in any

particular network.
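Because a topology is just a graph of nodes and links, it can be described in code without any reference to distances or cable types. The sketch below builds the link sets for a star and a ring and recognizes each from its pattern of node degrees. The function names are hypothetical, and the checks look only at degrees, so they assume the graph is connected.

```python
def star(n):
    """Links of a star: node 0 is the centre, nodes 1..n-1 are leaves."""
    return {(0, i) for i in range(1, n)}


def ring(n):
    """Links of a ring: each node is joined to the next, and the last to the first."""
    return {(i, (i + 1) % n) for i in range(n)}


def degrees(links):
    """Count how many links touch each node."""
    count = {}
    for a, b in links:
        count[a] = count.get(a, 0) + 1
        count[b] = count.get(b, 0) + 1
    return count


def looks_like_star(links):
    """One node linked to all others, every other node with exactly one link."""
    d = sorted(degrees(links).values())
    return len(d) >= 3 and d == [1] * (len(d) - 1) + [len(d) - 1]


def looks_like_ring(links):
    """Every node has exactly two links (connectivity is assumed, not checked)."""
    d = degrees(links)
    return len(d) >= 3 and all(v == 2 for v in d.values())


if __name__ == "__main__":
    print(looks_like_star(star(5)), looks_like_ring(ring(5)))
```

Two networks with very different cable lengths or transmission rates produce the same link set here, which is the sense in which their topologies are identical.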

Bus Topology:

In local area networks where bus topology is used, each node (computer or server) is connected to a single shared cable, the bus. A signal from the source travels in both directions along the bus and reaches every machine connected to it. If a machine's address does not match the intended address for the data, the machine ignores the data. If the address does match, the data is accepted. Since the bus topology consists of only one wire, it is rather inexpensive to implement when compared to other topologies. However, the low cost of implementing the technology is offset by the high cost of managing the network. Additionally, since only one cable is utilized, it can be a single point of failure. If the network cable breaks, the entire network will be down.

Advantages and disadvantages of a bus network:

Advantages

· Easy to implement and extend.

· Easy to install.

· Well-suited for temporary or small networks not requiring high speeds (quick setup).

· Less expensive than other topologies (although this has become less important in recent years due to devices like switches).

· Cost effective; only a single cable is used.

· Easy identification of cable faults.

Disadvantages

· Limited cable length and number of stations.

· If there is a problem with the cable, the entire network breaks down.

· Maintenance costs may be higher in the long run.

· Performance degrades as additional computers are added or under heavy traffic (shared bandwidth).

· Proper termination is required at both ends of the cable to prevent signal reflections.

· Significant capacitive load (each bus transaction must be able to reach the most distant link).

· It works best with a limited number of nodes.

· Commonly has a slower data transfer rate than other topologies.

· Only one packet can be on the bus at any one time.

Star Topology:

Star networks are one of the most common computer network topologies. In its simplest form, a star network consists of one central switch, hub or computer, which acts as a conduit to transmit messages. All other nodes are connected to this central node, which provides a common connection point for them. Thus, the hub and leaf nodes, and the transmission lines between them, form a graph with the topology of a star. If the central node is passive, the originating node must be able to tolerate the reception of an echo of its own transmission, delayed by the two-way transmission time (i.e. to and from the central node) plus any delay generated in the central node. An active star network has an active central node that usually has the means to prevent echo-related problems.

The star

topology reduces the chance of network failure by connecting all of the systems

to a central node. When applied to a bus-based network, this central hub

rebroadcasts all transmissions received from any peripheral node to all

peripheral nodes on the network, sometimes including the originating node. All

peripheral nodes may thus communicate with all others by transmitting to, and

receiving from, the central node only. The failure of a transmission line

linking any peripheral node to the central node will result in the isolation of

that peripheral node from all others, but the rest of the systems will be

unaffected.

In practice, each node (file server, workstation, or peripheral) is connected directly to a central network hub, switch, or concentrator.

Data on a star network passes through the hub, switch, or concentrator before continuing to its destination. The hub, switch, or concentrator manages and controls all functions of the network. It also acts as a repeater for the data flow. This configuration is common with twisted pair cable. However, it can also be used with coaxial cable or optical fiber cable.

Advantages

· Better performance: star topology prevents the passing of data packets through an excessive number of nodes. At most, 3 devices and 2 links are involved in any communication between any two devices. Although this topology places a huge overhead on the central hub, with adequate capacity, the hub can handle very high utilization by one device without affecting others.

· Isolation of devices: Each device is inherently isolated by the link that connects it to the hub. This makes the isolation of individual devices straightforward and amounts to disconnecting each device from the others. This isolation also prevents any non-centralized failure from affecting the network.

· Benefits from centralization: As the central hub is the bottleneck, increasing its capacity, or connecting additional devices to it, increases the size of the network very easily. Centralization also allows the inspection of traffic through the network. This facilitates analysis of the traffic and detection of suspicious behavior.

· Easy to detect faults and to remove parts.

· No disruptions to the network when connecting or removing devices.

Disadvantages

· High dependence of the system on the functioning of the central hub

· Failure of the central hub renders the network inoperable

Ring Topology:

A ring network is a network topology in which each node connects to exactly two other nodes, forming a single continuous pathway for signals through each node: a ring. Data travels from node to node, with each node along the way handling every packet. Because a ring topology provides only one pathway between any two nodes, ring networks may be disrupted by the failure of a single link. A node failure or cable break might isolate every node attached to the ring.

Fiber Distributed Data Interface (FDDI) networks overcome this vulnerability by sending data on a clockwise and a counterclockwise ring: in the event of a break, data is wrapped back onto the complementary ring before it reaches the end of the cable, maintaining a path to every node along the resulting "C-Ring". Many ring networks add a "counter-rotating ring" to form a redundant topology. Such "dual ring" networks include Spatial Reuse Protocol, Fiber Distributed Data Interface (FDDI), and Resilient Packet Ring.

Advantages

· Very orderly network where every device has access to the token and the opportunity to transmit

· Performs better than a bus topology under heavy network load

· Does not require network server to manage the connectivity between the computers

Disadvantages

· One malfunctioning workstation can create problems for the entire network

· Moves, adds and changes of devices can affect the network

· Network adapter cards much more expensive than Ethernet cards and hubs

· Much slower than an Ethernet network under normal load

Tree

Topology:

A tree topology is a hierarchical network topology. A central 'root' node (the top level of the hierarchy) is connected by point-to-point links to one or more nodes that are one level lower in the hierarchy (the second level). Each second-level node is in turn connected by point-to-point links to one or more nodes at the third level, and so on down the hierarchy. The root is the only node that has no other node above it. When the hierarchy of the tree is symmetrical, each node has a fixed number of nodes connected to it at the next lower level; this number is referred to as the 'branching factor' of the tree. The nodes at the lowest level are the individual peripheral nodes of the tree.

A network

that is based upon the physical hierarchical topology must have at least three

levels in the hierarchy of the tree, since a network with a central 'root' node

and only one hierarchical level below it would exhibit the physical topology of

a star.

A network

that is based upon the physical hierarchical topology and with a branching

factor of 1 would be classified as a physical linear topology.

The

branching factor, f, is independent of the total number of nodes in the network

and, therefore, if the nodes in the network require ports for connection to

other nodes the total number of ports per node may be kept low even though the

total number of nodes is large – this makes the effect of the cost of adding

ports to each node totally dependent upon the branching factor and may

therefore be kept as low as required without any effect upon the total number

of nodes that are possible.

The total

number of point-to-point links in a network that is based upon the physical

hierarchical topology will be one less than the total number of nodes in the

network.
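
The relationship between branching factor, number of nodes and number of links can be checked with a short calculation. The sketch below assumes a symmetrical tree in which every node above the lowest level has exactly `branching_factor` children; the function name and the sample values are chosen only for illustration.

```python
def tree_size(branching_factor, levels):
    """Return (nodes, links, ports_per_node) for a symmetrical tree."""
    # Level 0 holds the root, level 1 holds f nodes, level 2 holds f*f, ...
    nodes = sum(branching_factor ** level for level in range(levels))
    links = nodes - 1                       # one link per non-root node
    ports_per_node = branching_factor + 1   # f downlinks plus one uplink
    return nodes, links, ports_per_node

for f, levels in [(3, 3), (3, 6), (1, 4)]:
    nodes, links, ports = tree_size(f, levels)
    print(f"f={f}, levels={levels}: {nodes} nodes, {links} links, "
          f"at most {ports} ports per node")
```

With a branching factor of 3, going from three levels to six raises the node count from 13 to 364 while the port count per node stays at 4. A branching factor of 1 gives the linear case, with each node linked only to the next.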

If the nodes

in a network that is based upon the physical hierarchical topology are required

to perform any processing upon the data that is transmitted between nodes in

the network, the nodes that are at higher levels in the hierarchy will be

required to perform more processing operations on behalf of other nodes than

the nodes that are lower in the hierarchy. Such a type of network topology is

very useful and highly recommended.

Tree

topology advantages:

·

It is the best topology for a large computer

network for which a star topology or ring topology are unsuitable due to the

sheer scale of the entire network. Tree topology divides the whole network into

parts that are more easily manageable.

·

Tree topology makes it possible to have a point

to point network.

·

All computers have access to their immediate

neighbors in the network and also the central hub. This kind of network

makes it possible for multiple network devices to be connected with the central

hub.

·

It overcomes two limitations: the limited number of hub connection points in a star network topology, and the broadcast-traffic limitation of a bus network topology.

·

A tree network provides enough room for future

expansion of a network.

Tree

topology disadvantages:

·

Dependence of the entire network on one central

hub is a point of vulnerability for this topology. A failure of the central hub

or failure of the main data trunk cable, can cripple the whole network.

·

With increase in size beyond a point, the

management becomes difficult.

Mesh

Topology:

Mesh networking

(topology) is a type of networking where each node must not only capture and

disseminate its own data, but also serve as a relay for other sensor nodes,

that is, it must collaborate to propagate the data in the network.

The

self-healing capability enables a routing based network to operate when one

node breaks down or a connection goes bad. As a result, the network is

typically quite reliable, as there is often more than one path between a source

and a destination in the network. Although mostly used in wireless scenarios,

this concept is also applicable to wired networks and software interaction.

Advantages

of Mesh Topology

·

Dedicated links are used in the topology, which guarantees that each connection is able to carry its own data load, thereby eliminating the traffic problems that are common when links are shared by multiple devices.

·

It is a robust topology. When one link in the

topology becomes unstable, it does not cause the entire system to halt.

·

If the network is to be expanded, it can be done

without causing any disruption to current users of the network.

·

It is possible to transmit data, from one node

to a number of other nodes simultaneously

·

Troubleshooting, in case of a problem, is easy

as compared to other network topologies.

·

This topology ensures data privacy and security,

as every message travels along a dedicated link.

Disadvantages

of Mesh Topology

·

The first disadvantage of this topology is that,

it requires a lot more hardware (cables, etc.) as compared to other Local Area

Network (LAN) topologies.

·

The implementation (installation and

configuration) of this topology is very complicated and can get very messy. A large

number of Input / Output (I/O) ports are required.

·

It is an impractical solution when a large number

of devices are to be connected to each other in a network.

·

The cost of installation and maintenance is

high, which is a major deterrent.
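
The hardware cost mentioned above grows quickly with network size. The sketch below assumes a full mesh, in which every node has a dedicated link to every other node; partial meshes need fewer links, and the function name is only illustrative.

```python
def full_mesh_cost(nodes):
    """Return (links, ports_per_node) for a full mesh of the given size."""
    links = nodes * (nodes - 1) // 2   # one link per unordered pair of nodes
    ports_per_node = nodes - 1         # one port for each other node
    return links, ports_per_node

for n in (5, 10, 50):
    links, ports = full_mesh_cost(n)
    print(f"{n} nodes: {links} links, {ports} ports per node")
```

Ten times as many nodes (5 to 50) needs more than a hundred times as many links (10 to 1225), which is why a full mesh becomes impractical for large networks.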

Transmission Media

Various

physical media can be used to transport a stream of bits from one device to

another. Each has its own characteristics in terms of bandwidth, propagation

delay, cost, and ease of installation and maintenance. Media can be generally classified

as guided (e.g. copper and fiber cable) and unguided (wireless) media. These are the main categories of transmission media used in data communications networks. Guided media, also called bound media, include coaxial cable, twisted pair cable and optical fiber cable.

Coaxial cable:

A coaxial

cable has a central copper wire core, surrounded by an insulating (dielectric)

material. Braided metal shielding surrounds the dielectric and helps to absorb

unwanted external signals (noise), preventing it from interfering with the data

signal travelling along the core. A plastic sheath protects the cable from

damage. A terminating resistor is used at each end of the cable to prevent

transmitted signals from being reflected back down the cable. The following

diagram illustrates the basic construction of a coaxial cable.

Construction

of coaxial cable

Coaxial

cable has a fairly high degree of immunity to noise, and can be used over

longer distances (up to 500 meters) than twisted pair cable. Coaxial cable has,

in the past, been used to provide network backbone cable segments. Coaxial

cable has largely been replaced in computer networks by optical fiber and

twisted pair cable, with fiber used in the network backbone, and twisted pair

used to connect workstations to network hubs and switches.

Thick net

cable (also known as 10Base5) is a fairly thick cable (0.5 inches in diameter).

The 10Base5 designation refers to the 10 Mbps maximum data rate, baseband

signaling and 500 meter maximum segment length. Thick net was the original

transmission medium used in Ethernet networks, and supported up to 100 nodes per

network segment. An Ethernet transceiver was connected to the cable using a

vampire tap, so called because it clamps onto the cable, forcing a spike

through the outer shielding to make contact with the inner conductor, while two

smaller sets of teeth bite into the outer conductor. Transceivers could be

connected to the network cable while the network was live. A separate drop

cable with an attachment unit interface (AUI) connector at each end connected

the transceiver to the network interface card in the workstation (or other

network device). The drop cable was typically a shielded twisted pair cable,

and could be up to 50 meters in length. The minimum cable length between

connections (taps) on a cable segment was 2.5 meters.

Thick net

(10Base5) coaxial cable

Thin net

cable (also known as 10Base2) is thinner than Thick net (approximately 0.25

inches in diameter) and as a consequence is cheaper and far more flexible. The

10Base2 designation refers to the 10 Mbps maximum data rate, baseband

signaling and 185 (nearly 200) meter maximum segment length. A T-connector is

used with two BNC connectors to connect the network segment directly to the

network adapter card. The length of cable between stations must be at least 50

centimeters, and Thin net can support up to 30 nodes per network segment.

Thin net

(10Base2) coaxial cable
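
The naming scheme described above can be decoded mechanically. The following sketch is an illustration only: `parse_designation` is a hypothetical helper, and it handles only the two coaxial designations discussed here. The trailing digit gives the nominal segment length in hundreds of meters, so 10Base2 decodes to a nominal 200 m even though the actual limit quoted above is 185 m.

```python
import re

# Actual limits quoted in the text: (max segment m, max nodes, min spacing m)
ACTUAL_LIMITS = {
    "10Base5": (500, 100, 2.5),
    "10Base2": (185, 30, 0.5),
}

def parse_designation(name):
    """Split a designation such as '10Base5' into its component meanings."""
    match = re.fullmatch(r"(\d+)Base(\d+)", name)
    if match is None:
        raise ValueError(f"unrecognised designation: {name}")
    rate, segment = match.groups()
    return {
        "rate_mbps": int(rate),
        "signaling": "baseband",
        "nominal_segment_m": int(segment) * 100,
    }

for name in ACTUAL_LIMITS:
    print(name, parse_designation(name), "actual limits:", ACTUAL_LIMITS[name])
```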

Coaxial

cable has the following advantages and disadvantages:

Advantages

·

Highly resistant to EMI (electromagnetic

interference)

·

Highly resistant to physical damage

Disadvantages

·

Expensive

·

Inflexible construction (difficult to install)

·

Unsupported by newer networking standards

Twisted

pair cable:

Twisted pair

copper cable is still widely used, due to its low cost and ease of

installation. A twisted pair consists of two insulated copper wires, twisted

together to reduce electrical interference between adjacent pairs of wires.

This type of cable is still used in the subscriber loop of the public telephone

system (the connection between a customer and the local telephone exchange),

which can extend for several kilometers without amplification. The subscriber

loop is essentially an analogue transmission line, although twisted pair cables

are also used in computer networks to carry digital signals over short

distances.

The

bandwidth of twisted pair cable depends on the diameter of wire used, and the

length of the transmission line. The type of cable currently used in local area

networks has four pairs of wires. Until recently, category 5 or category 5E

cable has been used, but category 6 is now used for most new installations. The

main difference between the various categories is in the data rate supported -

category 6 cable will support gigabit Ethernet. The main disadvantage of UTP

cables in networks is that, due to the relatively high degree of attenuation

and a susceptibility to electromagnetic interference, high speed digital

signals can only be reliably transmitted over cable runs of 100 metres or less.

Unshielded

twisted pair cable

Shielded

Twisted Pair (STP) cable was introduced in the 1980s by IBM as the recommended

cable for their Token Ring network technology. It is similar to unshielded

twisted pair cable except that each pair is individually foil shielded, and the

cable has a braided drain wire that is earthed at one end during installation.

The popularity of STP has declined for the following reasons:

·

High cost of cable and connectors

·

More difficult to install than UTP

·

Ground loops can occur if incorrectly installed

·

There is still a cable length limitation of 100 meters

Shielded

twisted pair cable

Advantages

·

It is a thin, flexible cable that is easy to

string between walls.

·

More lines can be run through the same wiring

ducts.

·

UTP costs less per meter/foot than any other

type of LAN cable.

·

Electrical noise going into or coming from the

cable can be prevented.

·

Cross-talk is minimized.

Disadvantages

·

Twisted pair’s susceptibility to electromagnetic

interference greatly depends on the pair twisting schemes (usually patented by

the manufacturers) staying intact during the installation. As a result, twisted

pair cables usually have stringent requirements for maximum pulling tension as

well as minimum bend radius. This relative fragility of twisted pair cables

makes the installation practices an important part of ensuring the cable’s

performance.

·

In video applications that send information

across multiple parallel signal wires, twisted pair cabling can introduce

signaling delays known as skew which results in subtle color defects and

ghosting due to the image components not aligning correctly when recombined in

the display device. The skew occurs because twisted pairs within the same cable

often use a different number of twists per meter so as to prevent common-mode

crosstalk between pairs with identical numbers of twists. The skew can be

compensated by varying the length of pairs in the termination box, so as to

introduce delay lines that take up the slack between shorter and longer pairs,

though the precise lengths required are difficult to calculate and vary

depending on the overall cable length.

Optical

fiber:

Optical

fibers are thin, solid strands of glass that transmit information as pulses of

light. The fiber has a core of high-purity glass, between 6 μm and 50 μm in

diameter, down which the light pulses travel. The core is encased in a covering

layer made of a different type of glass, usually about 125 μm in diameter,

known as the cladding. An outer plastic covering, the primary buffer, provides

some protection, and takes the overall diameter to about 250 μm. The structure

of an optical fiber is shown below.

The basic

construction of an optical fiber

The cladding

has a slightly lower refractive index than the core (typical values are 1.47

and 1.5 respectively), so that as the pulses of light travel along the fiber

they are reflected back into the core each time they meet the boundary between

the core and the cladding. Optical fibers lose far less of their signal energy

than copper cables, and can be used to transmit signals of a much higher

frequency. More information can be carried over longer distances with fewer

repeaters. The bandwidth achievable using optical fiber is almost unlimited, but in practice the data rate is limited by the time required to convert electronic digital signals to light pulses and vice versa (1 Gbps with older signaling equipment, considerably more with current equipment). Digital

data is converted to light pulses by either a light emitting diode (LED) or a

laser diode. Although some light is lost at each end of the fiber, most is

passed along the fiber to the receiver, where the light pulses are converted

back into electronic signals by a photo-detector.

As the ray

passes along the fiber it meets the boundary between the core and the cladding

at some point. Because the refractive index of the cladding is lower than that

of the core, the ray is reflected back into the core material, as long as the

angle of incidence ($\theta_i$) is greater than the critical angle ($\theta_c$). The

critical angle depends on the refractive indices of the two materials. In the case

of an optical fiber, the values are chosen so that almost all of the light is

reflected back into the fiber, and there is virtually no loss through the walls

of the fiber. This is called total internal reflection. The critical angle for

a particular fiber can be calculated using Snell's Law. This states that:

$$n_1 \sin\theta_1 = n_2 \sin\theta_2$$

where $\theta_1$ is the angle of incidence, $\theta_2$ is the angle of refraction, and $n_1$ and $n_2$ are the refractive indices of the core and cladding respectively. The effect on a ray of light passing along the fiber is shown below.

Light

transmission in an optical fiber
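
As a worked example, the critical angle follows from Snell's Law by setting the angle of refraction to 90 degrees, which gives sin θc = n2 / n1. The sketch below uses the typical refractive indices quoted earlier (1.5 for the core and 1.47 for the cladding); the function name is an assumption made for the example, and the angle is measured from the normal to the core-cladding boundary.

```python
import math

def critical_angle_deg(n_core, n_cladding):
    """Critical angle in degrees, from Snell's Law with refraction at 90 degrees."""
    return math.degrees(math.asin(n_cladding / n_core))

angle = critical_angle_deg(1.5, 1.47)
print(f"critical angle: {angle:.1f} degrees")
```

For these values the critical angle is a little under 79 degrees, so only rays that strike the boundary at a shallow grazing angle are totally internally reflected.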

In

step-index fibers, the refractive index of both the core and the cladding has a

constant value, so that the refractive index of the fiber steps from one value

to the next.

A step-index

fiber

A multi-mode

step-index fiber

Multi-mode

and mono-mode fibers

One way to

improve the performance of multi-mode fiber is to use a graded index fiber

instead of a step index fiber. The refractive index of this type of fiber varies

across the diameter of the core in such a way that light is made to follow a

curved path along the fiber (see below). Light near the edges of the core

travels faster than light at the centre of the core, so although some rays

follow a longer path than others, they all tend to arrive at the same time,

resulting in far less modal dispersion than would occur in a step-index

multi-mode fiber.

A graded

index fiber

Light paths

in a graded index fiber

Advantages

·

Better security - very hard to tap a fibre

without being noticed.

·

Longer cable runs

·

Greater bandwidth.

·

Not affected by electromagnetic interference.

·

Can connect between buildings with different

earth potentials. These could cause problems with a copper wired system.

·

Not affected by near-miss lightning strikes

·

Lower cost for 2 to 3 km fibre runs. CAT5

twisted-pair is limited to 100 metres.

·

Carrier signals on different frequencies

(colours) can be used to increase the capacity (wavelength division multiplexing, the optical form of frequency division multiplexing).

·

Single mode or monomode fibre has a very thin

inner glass layer.

·

This is so thin that it behaves like a wave

guide and the light can't follow different paths.

·

This reduces the dispersion and makes longer fibres possible, for example 30 km.

Disadvantages

·

Attenuation is still a problem and this limits

the maximum cable length

·

Dispersion is still a problem and this also

limits the maximum cable length

·

Scattering occurs when there are imperfections

in the fibre. This causes attenuation or energy loss.

·

Higher installation cost for small networks. For

major backbones fibre works out cheaper per megabit of bandwidth.

·

Optical fibres can be fragile although they are

reinforced with kevlar fibres and an outer protective plastic layer

·

Optical fibres are difficult to connect to the

transmitting light source and the receiving light detector. A complex cutting

and polishing operation is needed to make the fibre ends flat and free from

dirt or imperfections.

Unbound

Media or Unguided Media:

Unguided media do not guide the data signals along a fixed path; instead, the signals can travel along multiple paths. Because the signals are not bound to a cable, these media are also called “unbound media”. There are basically two types:

a)

Microwave

b)

Satellite Technology.

a)

Microwave:

Microwaves

are radio waves that are used to provide high-speed transmission. Both voice

and data can be transmitted through microwave. Data is transmitted through the

air from one microwave station to another, similar to radio signals.

Microwave

uses line-of-sight transmission: the signals travel in a straight path and cannot bend. Microwave stations or antennas are usually installed on high towers or buildings, and are placed within 20 to 30 miles of each other. Each station receives the signal from the previous station and transfers it to the next station. In this way, data is transferred from one place to another. There should be no buildings or mountains between microwave stations.

Advantages:

1. Its capacity is slightly higher than that of “Bound Media”.

2. Medium cost.

3. It can cover longer distances than are possible with bound media.

Disadvantages:

1. It is more prone to noise interference.

2. Its signals are strongly affected by rain and fog.

3. It is less secure and less reliable.

b)

Satellite Communication:

A communication satellite is a space station that receives microwave signals from an earth station, amplifies them and retransmits them back to earth. Communication satellites are placed in orbit about 22,300 miles above the earth. The data transfer speed of a communication satellite is very high.

The

transmission from earth station to satellite is called uplink. The transmission

from satellite to earth station is called downlink. An important advantage of

satellite is that a large volume of data can be communicated at once. The

disadvantage is that bad weather can severely affect the quality of satellite

transmission.

Advantages:

1. Low cost

per user (for pay TV)

2. High

Capacity

3. Very

large coverage area.

Disadvantages:

1. High

Installing & managing cost.

2. Receive

dishes & decoders required.

3. Delays

involved in the reception of the signal.
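
The delay listed above can be estimated from the altitude of about 22,300 miles. The sketch below is a rough lower bound under stated assumptions: the satellite is directly overhead, the signal travels at the speed of light in a vacuum, and processing time on the satellite is ignored. The function and constant names are chosen only for the example.

```python
MILES_TO_KM = 1.609344
SPEED_OF_LIGHT_KM_S = 299_792.458

def hop_delay_seconds(altitude_miles):
    """Propagation delay for one uplink plus one downlink."""
    distance_km = 2 * altitude_miles * MILES_TO_KM
    return distance_km / SPEED_OF_LIGHT_KM_S

delay = hop_delay_seconds(22_300)
print(f"earth-satellite-earth delay: {delay * 1000:.0f} ms")
```

This works out to roughly a quarter of a second per hop, before any equipment delay is added.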

Wireless

Media:

Wireless telecommunications is the transfer of information between two or more points that are not physically connected. Distances can be short, such as a few meters for a television remote control, or long, ranging from thousands to millions of kilometers for deep-space radio communications. It encompasses various types of

fixed, mobile, and portable two-way radios, cellular telephones, personal

digital assistants (PDAs), and wireless networking. Other examples of wireless

technology include GPS units, garage door openers, wireless

computer mice, keyboards and headsets, satellite television and cordless

telephones.

Network Connecting Devices (Modem, NIC, Switch / Hub, Router, Gateway, Repeater,

Bluetooth, IR, WiFi):

Computer

networking devices are units that mediate data in a computer network. Computer

networking devices are also called network equipment, Intermediate Systems (IS)

or InterWorking Unit (IWU). Units which are the last receiver or generate data

are called hosts or data terminal equipment.

Modem

A modem

(modulator-demodulator) is a device that modulates an analog carrier signal to

encode digital information, and also demodulates such a carrier signal to

decode the transmitted information. The goal is to produce a signal that can be

transmitted easily and decoded to reproduce the original digital data. Modems

can be used over any means of transmitting analog signals, from light emitting

diodes to radio. The most familiar example is a voice band modem that turns the

digital data of a personal computer into modulated electrical signals in the

voice frequency range of a telephone channel. These signals can be transmitted

over telephone lines and demodulated by another modem at the receiver side to

recover the digital data.

Modems are

generally classified by the amount of data they can send in a given unit of

time, usually expressed in bits per second (bit/s, or bps). Modems can

alternatively be classified by their symbol rate, measured in baud. The baud

unit denotes symbols per second, or the number of times per second the modem

sends a new signal. For example, the ITU V.21 standard used audio

frequency-shift keying, that is to say, tones of different frequencies, with

two possible frequencies corresponding to two distinct symbols (or one bit per

symbol), to carry 300 bits per second using 300 baud. By contrast, the original

ITU V.22 standard, which was able to transmit and receive four distinct symbols

(two bits per symbol), handled 1,200 bit/s by sending 600 symbols per second

(600 baud) using phase shift keying.
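
The relationship between symbol rate and bit rate in these two examples can be written as bit rate = baud × log2(number of distinct symbols). The short sketch below reproduces the V.21 and V.22 figures from the text; the function name is an assumption made for the example.

```python
import math

def bit_rate(baud, distinct_symbols):
    """Bits per second carried at a given symbol rate."""
    bits_per_symbol = math.log2(distinct_symbols)
    return baud * bits_per_symbol

v21 = bit_rate(300, 2)   # two tones, one bit per symbol
v22 = bit_rate(600, 4)   # four symbols, two bits per symbol
print(f"V.21: {v21:.0f} bit/s, V.22: {v22:.0f} bit/s")
```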

Network

interface controller (NIC)

A network

interface controller (also known as a network interface card, network adapter,

LAN adapter and by similar terms) is a computer hardware component that

connects a computer to a computer network.

Whereas

network interface controllers were commonly implemented on expansion cards that

plug into a computer bus, the low cost and ubiquity of the Ethernet standard

means that most newer computers have a network interface built into the

motherboard.

The network

controller implements the electronic circuitry required to communicate using a

specific physical layer and data link layer standard such as Ethernet, Wi-Fi,

or Token Ring. This provides a base for a full network protocol stack, allowing

communication among small groups of computers on the same LAN and large-scale

network communications through routable protocols, such as IP.

Switch /

Hub

A network

switch or switching hub is a computer networking device that connects network

segments.

The term

commonly refers to a multi-port network bridge that processes and routes data

at the data link layer (layer 2) of the OSI model. Switches that additionally

process data at the network layer (Layer 3) and above are often referred to as

Layer 3 switches or multilayer switches.

The network

switch plays an integral part in most modern Ethernet local area networks

(LANs). Mid-to-large sized LANs contain a number of linked managed switches.

Small office/home office (SOHO) applications typically use a single switch, or

an all-purpose converged device such as a gateway to access small office/home

broadband services such as DSL or cable internet. In most of these cases, the

end-user device contains a router and components that interface to the

particular physical broadband technology. User devices may also include a

telephone interface for VoIP.

An Ethernet

switch operates at the data link layer of the OSI model to create a separate

collision domain for each switch port. With 4 computers (e.g., A, B, C, and D)

on 4 switch ports, A and B can transfer data back and forth, while C and D also

do so simultaneously, and the two conversations will not interfere with one

another. In the case of a hub, they would all share the bandwidth and run in

half duplex, resulting in collisions, which would then necessitate

retransmissions. Using a switch is called microsegmentation. This allows

computers to have dedicated bandwidth on point-to-point connections to the

network and to therefore run in full duplex without collisions.
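
The difference between a hub and a switch can be illustrated with a small model. The classes below are a simplified sketch, not a description of any particular product: the hub repeats every frame to all other ports, while the switch is assumed to learn which port each source address lives on and to forward a frame only to the port of its destination. The class names, the method names and the single-letter addresses are all hypothetical.

```python
class Hub:
    def __init__(self, ports):
        self.ports = ports

    def forward(self, in_port, src, dst):
        # A hub repeats the frame on every port except the one it came in on.
        return [p for p in range(self.ports) if p != in_port]


class Switch:
    def __init__(self, ports):
        self.ports = ports
        self.table = {}  # learned mapping: address -> port

    def forward(self, in_port, src, dst):
        self.table[src] = in_port            # learn where the sender is
        out_port = self.table.get(dst)
        if out_port is None:                 # unknown destination: flood
            return [p for p in range(self.ports) if p != in_port]
        return [] if out_port == in_port else [out_port]


hub, switch = Hub(4), Switch(4)
print(hub.forward(0, "A", "B"))     # every other port sees the frame
print(switch.forward(0, "A", "B"))  # B not yet learned, so the frame is flooded
print(switch.forward(1, "B", "A"))  # A is known: only port 0 receives it
print(switch.forward(0, "A", "B"))  # B is now known: only port 1 receives it
```

Once both addresses are learned, traffic between A and B never appears on the ports used by C and D, which is what allows the two conversations to proceed at the same time.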

Router

A router is

a device that forwards data packets between telecommunications networks,

creating an overlay internetwork. A router is connected to two or more data

lines from different networks. When data comes in on one of the lines, the

router reads the address information in the packet to determine its ultimate

destination. Then, using information in its routing table or routing policy, it

directs the packet to the next network on its journey or drops the packet. A

data packet is typically forwarded from one router to another through networks

that constitute the internetwork until it gets to its destination node.
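
The forward-or-drop decision described above can be sketched with Python's standard `ipaddress` module. This is a minimal illustration under assumptions that are not in the text: the routing table is a plain list, the line names are invented, and the most specific matching network (longest prefix) is chosen when more than one entry matches.

```python
import ipaddress

def next_hop(routing_table, destination):
    """Return the outgoing line for a destination, or None to drop the packet."""
    address = ipaddress.ip_address(destination)
    matches = [(net, line) for net, line in routing_table if address in net]
    if not matches:
        return None  # no route: the packet is dropped
    # Prefer the most specific route (the longest network prefix).
    return max(matches, key=lambda entry: entry[0].prefixlen)[1]

# Hypothetical routing table: (destination network, outgoing line)
table = [
    (ipaddress.ip_network("10.0.0.0/8"), "line-1"),
    (ipaddress.ip_network("10.1.0.0/16"), "line-2"),
]

for dest in ("10.1.2.3", "10.9.9.9", "192.168.1.1"):
    print(dest, "->", next_hop(table, dest))
```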

The most familiar type of routers are home and

small office routers that simply pass data, such as web pages and email,

between the home computers and the owner's cable or DSL modem, which connects

to the Internet (ISP). However, more sophisticated routers range from enterprise

routers, which connect large business or ISP networks, up to the powerful core

routers that forward data at high speed along the optical fiber lines of the

Internet backbone.

Gateway

A network

gateway is an internetworking system capable of joining together two networks

that use different base protocols. A network gateway can be implemented

completely in software, completely in hardware, or as a combination of both.

Depending on the types of protocols they support, network gateways can operate

at any level of the OSI model.

Because a

network gateway, by definition, appears at the edge of a network, related

capabilities like firewalls tend to be integrated with it. On home networks, a

broadband router typically serves as the network gateway although ordinary

computers can also be configured to perform equivalent functions.

(1) A node

on a network that serves as an entrance to another network. In enterprises, the

gateway is the computer that routes the traffic from a workstation to the

outside network that is serving the Web pages. In homes, the gateway is the ISP

that connects the user to the internet.

In

enterprises, the gateway node often acts as a proxy server and a firewall. The

gateway is also associated with both a router, which uses headers and forwarding

tables to determine where packets are sent, and a switch, which provides the

actual path for the packet in and out of the gateway.

(2) A

computer system located on earth that switches data signals and voice signals

between satellites and terrestrial networks.

(3) An

earlier term for router, though now obsolete in this sense as router is

commonly used.

Repeater

A repeater

is an electronic device that receives a signal and retransmits it at a higher

level and/or higher power, or onto the other side of an obstruction, so that

the signal can cover longer distances. The term "repeater" originated

with telegraphy and referred to an electromechanical device used by the army to

regenerate telegraph signals. Use of the term has continued in telephony and

data communications.

In

telecommunication, the term repeater has the following standardized meanings:

An analog

device that amplifies an input signal regardless of its nature (analog or

digital).

A digital

device that amplifies, reshapes, retimes, or performs a combination of any of

these functions on a digital input signal for retransmission. Because repeaters

work with the actual physical signal, and do not attempt to interpret the data

being transmitted, they operate on the Physical layer, the first layer of the

OSI model.

Bluetooth

Bluetooth is

a proprietary open wireless technology standard for exchanging data over short

distances (using short wavelength radio transmissions in the ISM band from

2400-2480 MHz) from fixed and mobile devices, creating personal area networks

(PANs) with high levels of security. Created by telecoms vendor Ericsson in

1994,[1] it was originally conceived as a wireless alternative to RS-232 data

cables. It can connect several devices, overcoming problems of synchronization.

Bluetooth is

managed by the Bluetooth Special Interest Group, which has more than 14,000

member companies in the areas of telecommunication, computing, networking, and

consumer electronics.[2] The SIG oversees the development of the specification,

manages the qualification program, and protects the trademarks.[3] To be

marketed as a Bluetooth device, it must be qualified to standards defined by

the SIG. A network of patents is required to implement the technology and is

only licensed to those qualifying devices; thus the protocol, whilst open, may

be regarded as proprietary.

Infrared

Infrared

(IR) light is electromagnetic radiation with a wavelength longer than that of

visible light, measured from the nominal edge of visible red light at 0.7

micrometers, and extending conventionally to 300 micrometres. These wavelengths

correspond to a frequency range of approximately 1 to 430 THz, and include most

of the thermal radiation emitted by objects near room temperature.

Microscopically, IR light is typically emitted or absorbed by molecules when

they change their rotational-vibrational movements.
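
The frequency range quoted above follows from the wavelength limits through f = c / λ. The sketch below checks both ends of the range; the function name is chosen only for the example.

```python
SPEED_OF_LIGHT_M_S = 299_792_458

def frequency_thz(wavelength_um):
    """Frequency in terahertz for a wavelength given in micrometers."""
    wavelength_m = wavelength_um * 1e-6
    return SPEED_OF_LIGHT_M_S / wavelength_m / 1e12

high = frequency_thz(0.7)    # edge of visible red light
low = frequency_thz(300)     # conventional long-wavelength limit
print(f"{low:.2f} THz to {high:.0f} THz")
```

The results, about 1 THz and about 428 THz, agree with the approximate range of 1 to 430 THz given in the text.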

Sunlight at

zenith provides an irradiance of just over 1 kilowatt per square meter at sea

level. Of this energy, 527 watts is infrared radiation, 445 watts is visible

light, and 32 watts is ultraviolet radiation.

WiFi

Wi-Fi is a

wireless standard for connecting electronic devices. A Wi-Fi enabled device

such as a personal computer, video game console, smartphone, and digital audio

player can connect to the Internet when within range of a wireless network

connected to the Internet. A single access point (or hotspot) has a range of

about 20 meters indoors. Wi-Fi has a greater range outdoors and multiple

overlapping access points can cover large areas.

"Wi-Fi"

is a trademark of the Wi-Fi Alliance and the term was originally created as a

simpler name for the "IEEE 802.11" standard. Wi-Fi is used by over 700 million people; there are over 4 million hotspots (places with Wi-Fi Internet connectivity) around the world, and about 800 million new Wi-Fi devices appear every year. Wi-Fi products that complete the Wi-Fi

Alliance interoperability certification testing successfully can use the Wi-Fi

CERTIFIED designation and trademark.

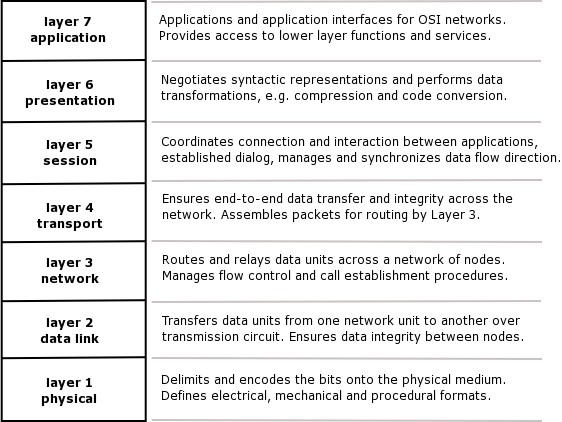

OSI Reference Model

The Open

Systems Interconnection model (OSI model) was a product of the Open Systems

Interconnection effort at the International Organization for Standardization.

It is a way of sub-dividing a communications system into smaller parts called

layers. Similar communication functions are grouped into logical layers. A

layer provides services to its upper layer while receiving services from the

layer below. On each layer, an instance provides service to the instances at

the layer above and requests service from the layer below.

For example,

a layer that provides error-free communications across a network provides the

path needed by applications above it, while it calls the next lower layer to

send and receive packets that make up the contents of that path. Two instances

at one layer are connected by a horizontal connection on that layer.
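The layering idea can be sketched in a few lines: each layer wraps the data it receives from the layer above on the way down, and removes its own wrapper on the way up. The layer names and the bracketed text "headers" here are purely illustrative assumptions, not a real protocol format.

```python
# Illustrative subset of layers, listed from top to bottom.
LAYERS = ["transport", "network", "data-link"]


def send_down(payload: bytes) -> bytes:
    """Pass data down the stack; each layer prepends its own header."""
    for name in LAYERS:
        header = f"[{name}]".encode("ascii")
        payload = header + payload
    return payload


def receive_up(frame: bytes) -> bytes:
    """Pass data up the stack; each layer strips the header of its peer."""
    for name in reversed(LAYERS):
        header = f"[{name}]".encode("ascii")
        if not frame.startswith(header):
            raise ValueError(f"missing {name} header")
        frame = frame[len(header):]
    return frame


wire = send_down(b"hello")
print(wire)              # the lowest layer's header ends up outermost
print(receive_up(wire))  # the original payload is recovered
```

Each layer only ever inspects its own header, which is the sense in which two instances at the same layer share a horizontal connection.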

Layer 1:

Physical Layer

The Physical

Layer defines electrical and physical specifications for devices. In

particular, it defines the relationship between a device and a transmission

medium, such as a copper or optical cable. This includes the layout of pins,

voltages, cable specifications, hubs, repeaters, network adapters, host bus

adapters (HBA used in storage area networks) and more.

To

understand the function of the Physical Layer, contrast it with the functions

of the Data Link Layer. Think of the Physical Layer as concerned primarily with

the interaction of a single device with a medium, whereas the Data Link Layer

is concerned more with the interactions of multiple devices (i.e., at least

two) with a shared medium. Standards such as RS-232 do use physical wires to

control access to the medium.

The major

functions and services performed by the Physical Layer are:

Establishment

and termination of a connection to a communications medium.

Participation

in the process whereby the communication resources are effectively shared among

multiple users. For example, contention resolution and flow control.

Modulation,

or conversion between the representation of digital data in user equipment and

the corresponding signals transmitted over a communications channel. These are

signals operating over the physical cabling (such as copper and optical fiber)

or over a radio link.
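As a rough illustration of the conversion between digital data and line signals, the sketch below uses a simple non-return-to-zero (NRZ) style mapping of bits to two voltage levels. This is a line-coding example rather than carrier modulation, and the voltage values are hypothetical.

```python
# Hypothetical signal levels in volts for a 1 bit and a 0 bit.
HIGH, LOW = 5.0, -5.0


def bits_of(data: bytes) -> list:
    """Expand bytes into a list of bits, most significant bit first."""
    return [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]


def nrz_encode(bits: list) -> list:
    """Map each bit to a voltage level held for one bit period."""
    return [HIGH if bit else LOW for bit in bits]


def nrz_decode(levels: list) -> list:
    """Recover bits by comparing each sampled level against zero volts."""
    return [1 if level > 0 else 0 for level in levels]


bits = bits_of(b"A")
signal = nrz_encode(bits)
print(bits)
print(signal)
```

A real physical layer also has to deal with clock recovery, noise, and the medium's bandwidth, none of which this sketch attempts.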

Layer 2:

Data Link Layer

The Data

Link Layer provides the functional and procedural means to transfer data

between network entities and to detect and possibly correct errors that may

occur in the Physical Layer. Originally, this layer was intended for

point-to-point and point-to-multipoint media, characteristic of wide area media

in the telephone system. Local area network architecture, which included

broadcast-capable multiaccess media, was developed independently of the ISO

work in IEEE Project 802. IEEE work assumed sublayering and management

functions not required for WAN use. In modern practice, data link protocols such

as the Point-to-Point Protocol (PPP) provide only error detection, not

sliding-window flow control. On Ethernet, the IEEE 802.2 LLC layer is not used

for most protocols, and on other local area networks its flow control and

acknowledgment mechanisms are rarely used. Sliding-window flow control and

acknowledgment are instead handled at the Transport Layer by protocols such as

TCP, although they remain in use at the Data Link Layer in niches where X.25

offers performance advantages.
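Error detection at this layer is commonly done with a checksum appended to each frame. The sketch below uses Python's standard `zlib.crc32` to build and verify a frame check sequence; the frame layout (payload followed by a 4-byte trailer) is an assumption made for illustration and does not reproduce any specific protocol's format.

```python
import struct
import zlib


def make_frame(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 trailer (big-endian) to the payload."""
    return payload + struct.pack("!I", zlib.crc32(payload))


def check_frame(frame: bytes) -> bool:
    """Return True if the trailer matches the CRC-32 of the payload."""
    if len(frame) < 4:
        return False
    payload, trailer = frame[:-4], frame[-4:]
    (expected,) = struct.unpack("!I", trailer)
    return zlib.crc32(payload) == expected


frame = make_frame(b"hello")
corrupted = bytes([frame[0] ^ 0x01]) + frame[1:]  # flip one bit in transit

print(check_frame(frame))      # True: frame arrived intact
print(check_frame(corrupted))  # False: the receiver can discard the frame
```

The receiver only detects the damage; recovering the lost data is left to a higher layer, consistent with the division of labor described above.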

Layer 3:

Network Layer

The Network

Layer provides the functional and procedural means of transferring variable

length data sequences from a source host on one network to a destination host

on a different network, while maintaining the quality of service requested by

the Transport Layer (in contrast to the data link layer which connects hosts

within the same network). The Network Layer performs network routing functions,

and might also perform fragmentation and reassembly, and report delivery

errors. Routers operate at this layer—sending data throughout the extended

network and making the Internet possible. The layer uses a logical addressing

scheme: values are chosen by the network engineer. The addressing scheme is

hierarchical.
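Fragmentation and reassembly can be sketched as follows. The fragment fields (byte offset, a "more fragments" flag, and the data) are loosely modeled on the idea used in IPv4, but this is a simplified illustration under that assumption, not an actual header layout. Here `mtu` stands for the maximum number of payload bytes per fragment.

```python
def fragment(payload: bytes, mtu: int) -> list:
    """Split a payload into (offset, more_fragments, data) tuples."""
    if mtu <= 0:
        raise ValueError("mtu must be positive")
    fragments = []
    for offset in range(0, len(payload), mtu):
        chunk = payload[offset:offset + mtu]
        more = offset + mtu < len(payload)  # False only on the last fragment
        fragments.append((offset, more, chunk))
    return fragments


def reassemble(fragments: list) -> bytes:
    """Rebuild the payload, tolerating fragments that arrive out of order."""
    ordered = sorted(fragments, key=lambda frag: frag[0])
    return b"".join(chunk for _, _, chunk in ordered)


parts = fragment(b"abcdefghij", 4)
print(parts)
print(reassemble(list(reversed(parts))))
```

Sorting by offset is what lets the receiver cope with fragments that take different routes and arrive in a different order.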

Careful

analysis of the Network Layer indicated that the Network Layer could have at

least three sublayers:

Subnetwork

Access – that considers protocols that deal with the interface to networks,

such as X.25;

Subnetwork

Dependent Convergence – when it is necessary to bring the level of a

transit network up to the level of networks on either side;

Subnetwork

Independent Convergence – which handles transfer across multiple networks.

The best

example of this latter case is CLNP (ISO 8473). It manages the

connectionless transfer of data one hop at a time, from end system to ingress

router, router to router, and from egress router to destination end system. It

is not responsible for reliable delivery to a next hop, but only for the

detection of erroneous packets so they may be discarded. In this scheme, IPv4

and IPv6 would have to be classed with X.25 as subnet access protocols because

they carry interface addresses rather than node addresses.

A number of

layer management protocols, a function defined in the Management Annex, ISO

7498/4, belong to the Network Layer. These include routing protocols, multicast

group management, Network Layer information and error, and Network Layer

address assignment. It is the function of the payload that makes these belong to

the Network Layer, not the protocol that carries them.

Layer 4:

Transport Layer

The

Transport Layer provides transparent transfer of data between end users,

providing reliable data transfer services to the upper layers. The Transport

Layer controls the reliability of a given link through flow control,

segmentation/desegmentation, and error control. Some protocols are state- and

connection-oriented. This means that the Transport Layer can keep track of the

segments and retransmit those that fail. The Transport Layer also acknowledges

successful data transmission and sends the next data if

no errors occurred.
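The track, acknowledge, and retransmit behavior described above can be sketched with a stop-and-wait scheme, one of the simplest approaches. Real transport protocols such as TCP use more elaborate sliding-window mechanisms; the `LossyChannel` class and its deterministic drop rule are hypothetical stand-ins for an unreliable network.

```python
def segment(data: bytes, size: int) -> list:
    """Split data into segments of at most `size` bytes."""
    return [data[i:i + size] for i in range(0, len(data), size)]


class LossyChannel:
    """Hypothetical channel that drops the first attempt of chosen segments."""

    def __init__(self, drop_first_attempt):
        self.pending_drops = set(drop_first_attempt)
        self.delivered = {}

    def send(self, seq: int, chunk: bytes) -> bool:
        """Return True (an acknowledgement) if the segment arrived."""
        if seq in self.pending_drops:
            self.pending_drops.discard(seq)
            return False  # lost in transit, so no acknowledgement
        self.delivered[seq] = chunk
        return True


def send_reliably(data: bytes, size: int, channel, max_tries: int = 3) -> int:
    """Send each segment, retransmitting until acknowledged.

    Returns the total number of transmissions that were needed.
    """
    transmissions = 0
    for seq, chunk in enumerate(segment(data, size)):
        for _ in range(max_tries):
            transmissions += 1
            if channel.send(seq, chunk):
                break  # acknowledged: move on to the next segment
        else:
            raise RuntimeError(f"segment {seq} was never acknowledged")
    return transmissions


channel = LossyChannel(drop_first_attempt={1})
count = send_reliably(b"abcdefgh", 3, channel)
received = b"".join(channel.delivered[s] for s in sorted(channel.delivered))
print(count, received)
```

With three segments and one simulated loss, the sender needs four transmissions to deliver the data intact.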

Although not

developed under the OSI Reference Model and not strictly conforming to the OSI

definition of the Transport Layer, typical examples of Layer 4 are the

Transmission Control Protocol (TCP) and User Datagram Protocol (UDP).

Of the

actual OSI protocols, there are five classes of connection-mode transport

protocols ranging from class 0 (which is also known as TP0 and provides the

fewest features) to class 4 (TP4, designed for less reliable networks, similar

to the Internet). Class 0 contains no error recovery, and was designed for use

on network layers that provide error-free connections. Class 4 is closest to

TCP, although TCP contains functions, such as the graceful close, which OSI

assigns to the Session Layer. Also, all OSI TP connection-mode protocol classes

provide expedited data and preservation of record boundaries, both of which TCP

is incapable of providing.

Layer 5:

Session Layer

The Session

Layer controls the dialogues (connections) between computers. It establishes,

manages and terminates the connections between the local and remote

application. It provides for full-duplex, half-duplex, or simplex operation,

and establishes checkpointing, adjournment, termination, and restart

procedures. The OSI model made this layer responsible for graceful close of

sessions, which is a property of the Transmission Control Protocol, and also

for session checkpointing and recovery, which is not usually used in the

Internet Protocol Suite. The Session Layer is commonly implemented explicitly

in application environments that use remote procedure calls.

Layer 6:

Presentation Layer

The

Presentation Layer establishes context between Application Layer entities, in

which the higher-layer entities may use different syntax and semantics if the

presentation service provides a mapping between them. If a mapping is

available, presentation service data units are encapsulated into session

protocol data units, and passed down the stack.

This layer

provides independence from data representation (e.g., encryption) by

translating between application and network formats. The presentation layer

transforms data into the form that the application accepts. This layer formats

and encrypts data to be sent across a network. It is sometimes called the

syntax layer.[5]
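One concrete case of translating between data representations is character-set conversion. The sketch below converts EBCDIC-encoded bytes to ASCII using Python's built-in `cp037` codec. EBCDIC exists in several code-page variants, so the choice of code page 037 is an assumption made for this example.

```python
def ebcdic_to_ascii(data: bytes) -> bytes:
    """Decode EBCDIC (code page 037) bytes and re-encode them as ASCII."""
    text = data.decode("cp037")
    return text.encode("ascii")


def ascii_to_ebcdic(data: bytes) -> bytes:
    """Decode ASCII bytes and re-encode them as EBCDIC (code page 037)."""
    text = data.decode("ascii")
    return text.encode("cp037")


ebcdic = ascii_to_ebcdic(b"hello")
print(ebcdic.hex())             # the same text has different byte values
print(ebcdic_to_ascii(ebcdic))  # round trip recovers the ASCII bytes
```

The application on each side sees the same text, while the bytes on the wire depend on the agreed transfer representation.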

The original

presentation structure used the basic encoding rules of Abstract Syntax

Notation One (ASN.1), with capabilities such as converting an EBCDIC-coded text

file to an ASCII-coded file, or serialization of objects and other data